Perhaps you have heard of companies like OpenAI, Arize AI, Clari or Databricks, or of tools like Dall-E or ChatGPT. All of these companies have three things in common: fast growth, a high valuation and a core business built around AI. AI is producing so many new companies and enabling such strong growth that Forbes created an “AI 50 – Companies to watch” list of the most innovative and fastest-growing AI companies. As we can already observe, AI is being adopted more and more, especially by high-tech companies.

But what about the rest? What about smaller, local and even medium-sized companies? What are the adoption challenges? How can these companies implement AI in an ethical but still performance-oriented way? This article will answer all of these questions and many more. As it builds on our introduction to AI, please browse through that previous article first if you haven’t read it yet or if you are new to the topic of Artificial Intelligence.

Landscape of current AI technologies

As the fastest-growing form of data-driven technology, AI continues to drive business impact by functioning ever more autonomously and transforming entire industries. AI adoption has skyrocketed over the last few years, with 86% of companies deeming it a mainstream technology as early as 2021. With this growing popularity, data and AI technology are being applied widely across the business world. Real-time market analysis and insights, visual assistance and instant evaluation of numerous alternatives are just a few of the many functionalities AI offers when it comes to transforming work operations and even restructuring entire supply chains.

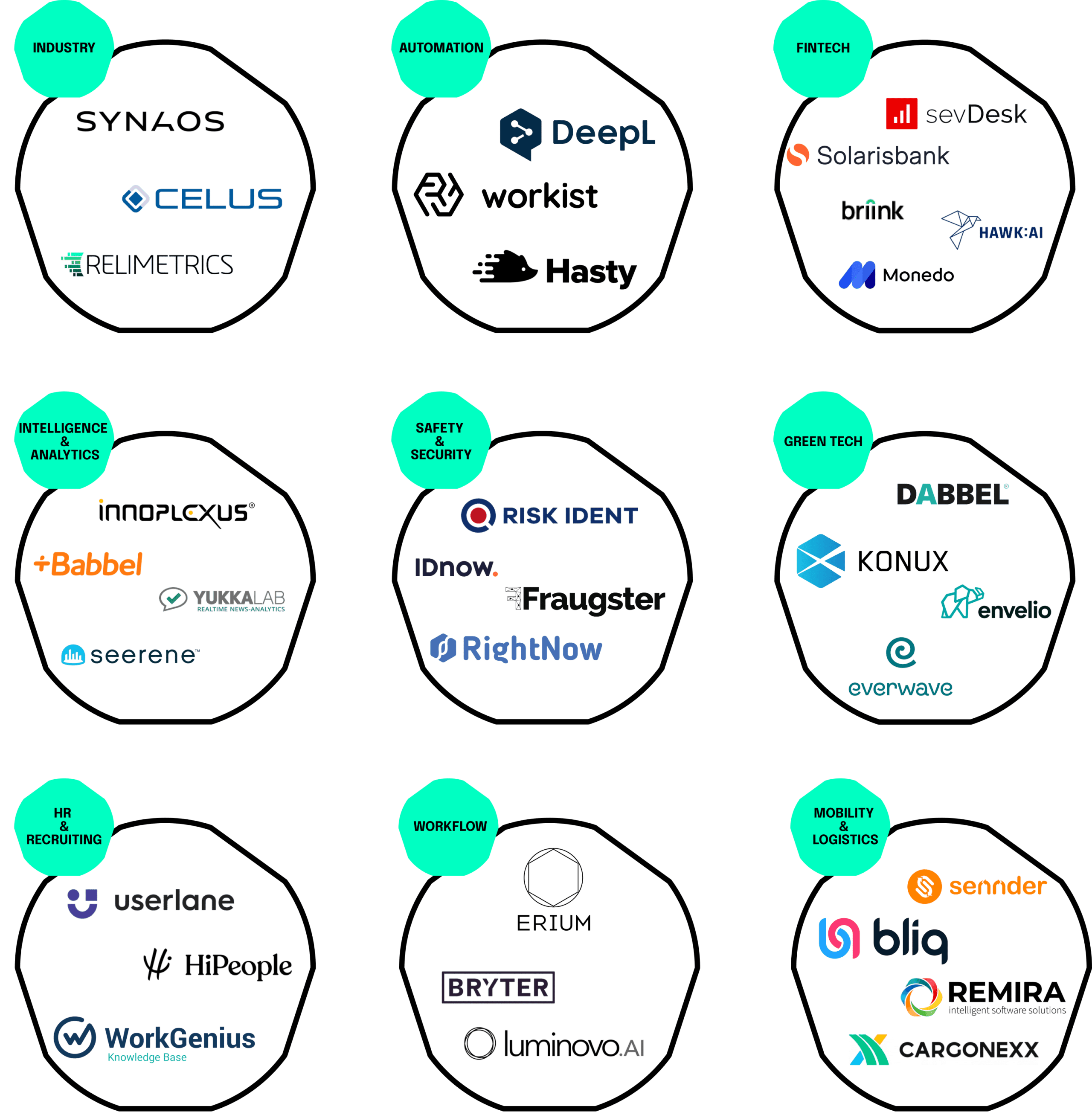

The rapid development of technology and the continuous shift towards digitalization are two of the key factors transforming the global landscape. In certain countries, the impact of advanced machine learning and AI, as part of that shift, appears to be particularly extensive. For instance, in Germany nearly 23% of all industrial companies use AI, moving a step closer towards emerging Industry 4.0 technologies. Evidently, AI, together with big data and analytics, is a powerful wave of change affecting various German industries. Looking to 2023 and beyond, this shift in the local business landscape will continue to be shaped and accelerated by the improved operational strategies and advanced capabilities that AI introduces.

When it comes to fostering and accelerating AI in Europe, Germany appears as the leader. It is predicted that by 2030 AI solutions will help Germany reach €430 billion in economic growth, which corresponds to an 11.3% increase in the country’s GDP compared to 2019. As Europe’s largest economy with a thriving AI market, Germany is home to one of the EU’s largest innovation centers, UnternehmerTUM. As part of this non-profit, neutral platform for entrepreneurship and innovation, appliedAI is Germany’s biggest initiative for the application of trustworthy AI technology, with the goal of bringing the whole country into the AI age.

It appears essential to continue to drive AI adoption and foster more collaboration between startups and corporations, given that AI is recognized as one of the most crucial technological developments and growth drivers of Germany’s economy. To shine more light on the AI startups in Europe, appliedAI has launched the annual German AI Startup Landscape, promoting more partnership opportunities with help from academia, government, and industry.

Rocky Journey to AI adoption

Ⅰ. Data quality & Tech

Successful adoption of AI models requires quality data, yet most tech leaders name creating and managing data sets as their biggest pitfall. While open-source datasets might suffice for trials, fully featured solutions require clean and organized data to achieve strategic goals effectively. Any AI application is only as smart and as efficient as the information it has been fed. One example of data quality influencing the quality of the overall AI system came to light in 2018 at Amazon. A resume-screening system there was trained on resumes submitted to the company over the previous decade, which were mostly from men. As a result, the algorithm downgraded resumes that contained certain words and phrases associated with women, such as “women” or “female”.

When datasets are irrelevant or inaccurately labeled, the application’s abilities become limited. Another reason behind a lack of quality data is organizations’ tendency to collect excessive amounts of data. In this case, data decay is a commonly observed outcome, resulting from accumulated inconsistencies and redundancies. Practices such as manual tagging, labeling, cleansing and randomization can help organizations obtain high-quality data. To improve data quality further, it is recommended to streamline the collection process and to standardize the structure of all data management processes.
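The cleansing and standardization practices mentioned above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the `Record` type, the `clean_records` helper and the sample rows are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical labeled training example."""
    text: str
    label: str


def clean_records(raw_rows):
    """Normalize, validate, and deduplicate raw (text, label) rows."""
    seen = set()
    cleaned = []
    for text, label in raw_rows:
        # Standardize the structure: collapse whitespace and unify casing.
        norm_text = " ".join((text or "").split()).lower()
        norm_label = (label or "").strip().lower()
        # Drop rows that are empty or unlabeled.
        if not norm_text or not norm_label:
            continue
        record = Record(norm_text, norm_label)
        # Remove redundancies: keep only the first copy of each record.
        if record in seen:
            continue
        seen.add(record)
        cleaned.append(record)
    return cleaned


# Hypothetical sample data, invented for illustration.
raw = [
    ("  Invoice overdue ", "Finance"),
    ("invoice   overdue", "finance"),  # duplicate once normalized
    ("Reset my password", ""),         # missing label
    ("Reset my password", "Support"),
]
cleaned = clean_records(raw)
print(cleaned)
```

Of the four raw rows, only two survive: the duplicate and the unlabeled row are dropped. A real pipeline would add domain-specific checks, but the principle of one standardized structure applied at collection time is the same.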

Ⅱ. Governance

- Data governance

Both the quality and the maintainability of data are crucial aspects of data governance. With growing concerns around transparency and privacy raised by data collection, more factors come into play. To address these concerns, organizations have to ensure that they can monitor and restrict their AI algorithms, as these need to be properly managed at all stages, including data extraction, processing, usage, and storage.

- Cybersecurity

In the face of rising cybercrime, it is more important than ever to address and prevent the risks associated with AI implementation. Data collected as part of AI initiatives is highly vulnerable to breaches. To mitigate the impact of data breaches and preserve the safety of user information, network segmentation is key. Network segmentation is a technique that divides a network into smaller, distinct subnetworks, each with its own designated set of security controls and services.
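To make the idea of segmentation more concrete, here is a minimal sketch using Python’s standard `ipaddress` module. The address range, the segment names and the allow-list are assumptions chosen purely for illustration; in practice segmentation is enforced by firewalls, VLANs or cloud security groups rather than by application code.

```python
import ipaddress

# Hypothetical corporate address range, chosen only for illustration.
corporate_net = ipaddress.ip_network("10.0.0.0/16")

# Hypothetical segment names; each is assigned its own /24 subnetwork.
segment_names = ["office", "ai-training", "customer-data", "guest"]
segments = dict(zip(segment_names, corporate_net.subnets(new_prefix=24)))


def segment_of(address):
    """Return the name of the segment containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, net in segments.items():
        if ip in net:
            return name
    return None


# Hypothetical allow-list: (source segment, destination segment) pairs.
allowed_flows = {("ai-training", "customer-data")}


def is_allowed(src, dst):
    """Permit traffic inside one segment or along an allow-listed flow."""
    src_seg, dst_seg = segment_of(src), segment_of(dst)
    if src_seg is None or dst_seg is None:
        return False
    return src_seg == dst_seg or (src_seg, dst_seg) in allowed_flows


for name, net in segments.items():
    print(name, net)
print(is_allowed("10.0.1.5", "10.0.2.15"))  # ai-training -> customer-data
print(is_allowed("10.0.3.7", "10.0.2.15"))  # guest -> customer-data
```

The point of the sketch is the default-deny rule: a host in the guest segment cannot reach customer data unless a flow is explicitly allowed, which limits how far a breach in one segment can spread.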

- Regulatory compliance

As more and more people become concerned with how companies access and use their confidential information, transparent data collection policies can help alleviate the suspicion surrounding this process. Legal regulations have been gaining substantial prominence in addressing the risks associated with AI and other data-centric operations. As this subject becomes increasingly important to users, organizations that adopt AI technologies have to evaluate their approach to compliance policies and other regulations. Compliance is especially important for organizations that operate in highly regulated industries such as finance and healthcare. As the field of AI-related regulation becomes trickier to navigate, enlisting third-party auditors appears to be the most sensible option.

In late 2022, EU ministers presented their position on the AI Act, the planned EU law to regulate artificial intelligence. The EU’s approach to artificial intelligence focuses on excellence and trust in order to strengthen research and industrial capacities and safeguard fundamental rights. However, the proposal is already the subject of critical debate within the AI ecosystem.

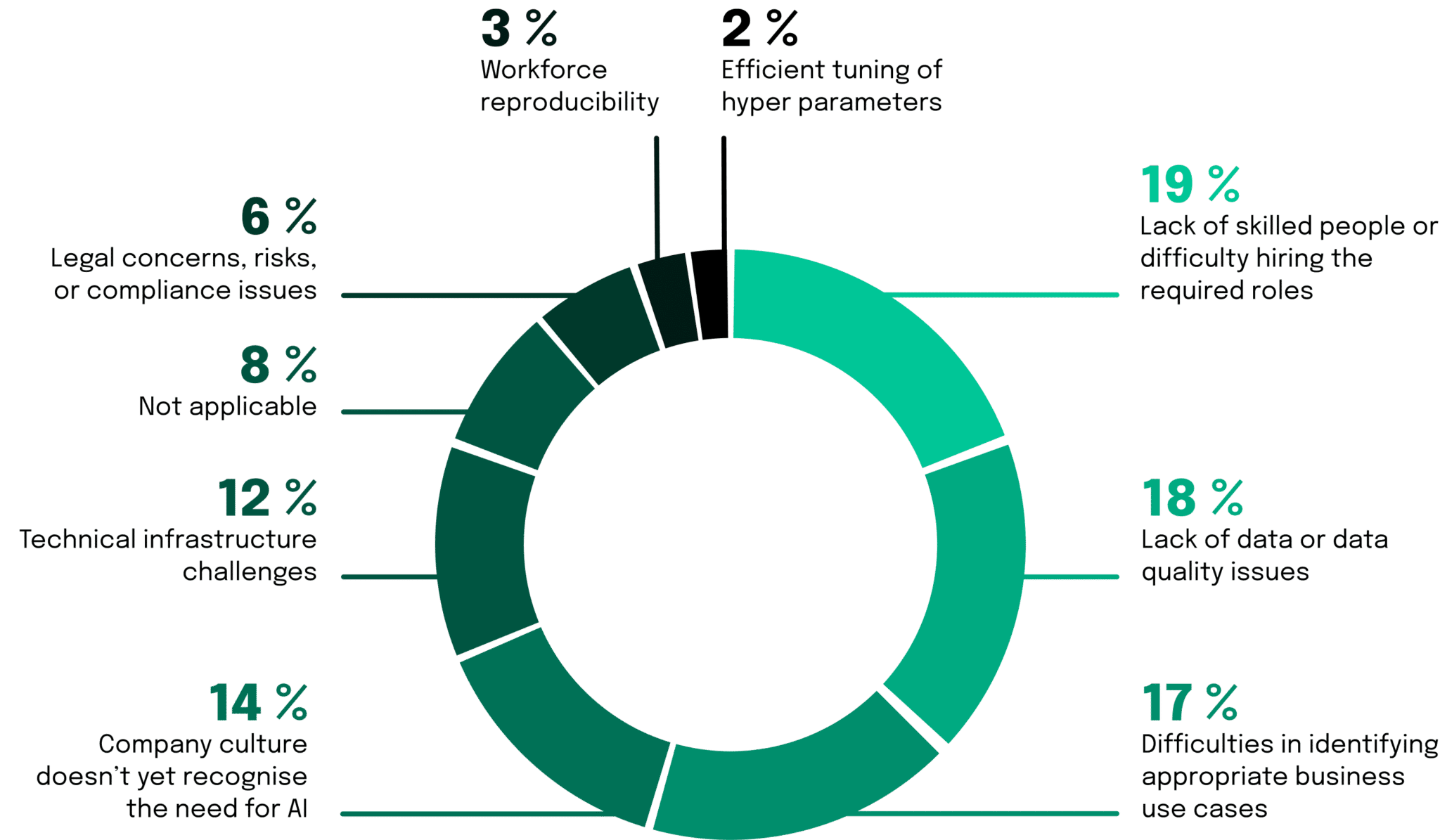

Ⅲ. Workforce readiness & Skills gap

The right skill set is essential for AI to reach its full effectiveness. Yet, according to Juniper’s survey, 73% of organizations say that they struggle with preparing and expanding their workforce for a smooth AI integration. A key obstacle at this stage of the digital transformation process is the AI skills gap. In the early phases of AI adoption, many organizations appear hesitant to recruit experienced AI professionals, such as machine learning modelers and data scientists, since doing so is challenging and expensive. However, scaling a data team is a crucial step in building the AI model and requires technical expertise that might be lacking internally. Even with a certain degree of in-house expertise, a lack of proper experience in AI-based technology is a potential hazard that could prevent organizations from realizing the full value AI models can offer. The economic potential for the AI companies themselves is strong: OpenAI, the company behind ChatGPT, is seeking a $30 billion valuation at the time of writing.

To make the transition towards an AI-optimized workflow as seamless as possible, the best solution would be to first focus on isolating the skills required for each strategic goal. To further drive digital upskilling, finding an expert partner that can provide a team of specialists could be a costly yet extremely valuable investment in the organization’s transformation journey. The right specialists would both help train the current teams to make up for the skills shortage and address the technical pitfalls of AI implementation, such as inefficient processes and integration issues.

Neosfer take

For now, ChatGPT & Co. still look like nice gimmicks. But it doesn’t take much imagination to envision the transformative impact such tools could have for certain industries (e.g., journalism). Nor would it be the first major innovation that looked like a toy at the outset.

If you think about it critically, ChatGPT & Co. are (to put it simply) logical continuations of already existing everyday helpers. First, calculators made it easier for us to perform difficult calculations. Then came DeepL and other tools to relieve us of tedious translation work. Now ChatGPT & Co. follow, simplifying, for example, the creation of social media posts, articles, and sometimes even economic papers or presentations.

Sure, the hype is big right now, but AI assistants like ChatGPT & Co. can also have an impact in financial services. Use cases could include the simplified creation of complex contracts, the improvement of customer service through “smarter” chatbots, or support in making investment decisions. However, these bots are only as good as the data they are fed, and sometimes even ChatGPT passes on incorrect information. It should also be remembered that banks are of course already working with or on ML systems, but these often involve customer data, which makes the whole thing more complex from a regulatory perspective. ChatGPT has an easier time with data gathered from the Internet.

You can also find out more about the impact ChatGPT & Co. could have on financial services in a podcast episode soon or at the upcoming Between the Towers event.

Implementing ethical AI in the future

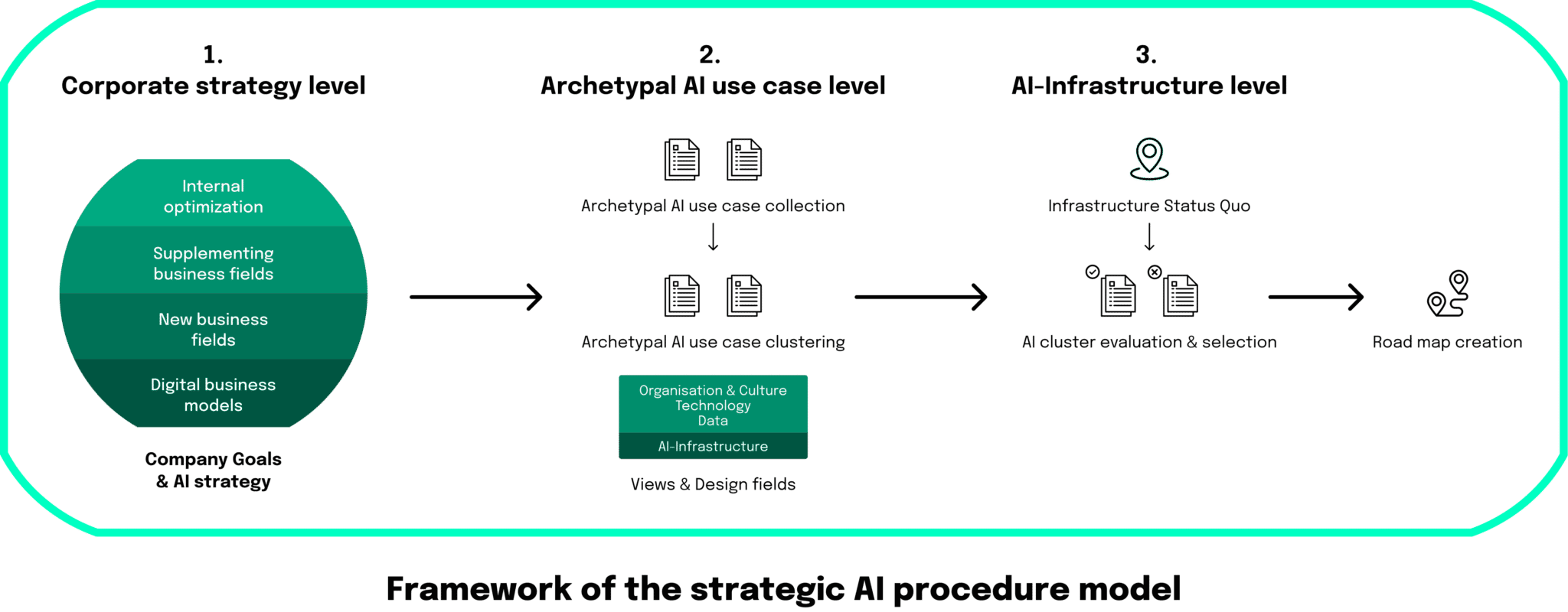

1. Corporate strategy & AI strategy

When evaluating AI as a technology for an organization’s digital transformation, there is a tendency to focus on the technical research rather than the business-strategic research. Studies indicate that the companies that manage to align their roadmap of use cases for AI applications with the bigger picture of their corporate strategy appear to be more successful in generating AI-added value. Given that AI strategy is introduced into the value chain design with the goal of ultimate optimization, it has to resonate with the main strategy defined by an organization to deliver upon that objective. Before implementing AI as a subset of the corporate strategy, it is essential to address a few questions in regard to how it can best maximize the overall value proposition. Some key considerations should include a) assessing the impact of AI adoption on core competencies of a company, as well as b) the elaboration of the new potential competencies it will create. Furthermore, it is important to c) define the main aim of introducing the AI strategy in terms of its effect on growth, profitability, or risk minimization. Overall, when developing the AI approach, the key points to address should include the long-term goal of its implementation and impact across all business fields, essentially tying it together with the corporate strategy.

2. Archetypal AI use case level

The framework suggests that the next phase of the strategic AI model focuses on composing a collection of archetypal AI use cases. This segment of the model has to remain open for continuous development, given that technological progress keeps widening the variety of use cases and opens up the opportunity for consistent additions. Because each use case is unique in terms of its AI capabilities and synergy of technologies, each one depends on several variables. Foremost, a company has to define which type of AI is applicable to each use case. To answer that question, it will have to consider the main desired function in terms of assessment, deduction, or reaction. At this stage, it is also important to decide whether the goal of the AI application is to imitate human behavior or to generate its own form of rationality. To further break down the use case collection into more specific clusters, other factors to consider include the means of value creation, the problems tackled, and the infrastructure required to provide the right input for the expected output. In terms of resources, factors such as time, capacity, and scalability have to be assessed to provide a relevant preselection of use cases. Overall, to reinforce the alignment with the corporate strategy, it is recommended to approach this phase of AI implementation with the idea of interconnectedness in mind: look out for possible synergies, dependencies, exclusions, and redundancies.

3. AI-architecture level

Executing the digital architecture management for the AI infrastructure level follows a few essential steps. A status quo analysis should be one of the initial steps and will normally include an overview of the existing technological infrastructure and data environment. In the next step, the AI infrastructure can be divided into more general views, with each view further composed of several design fields. For instance, design fields might include cybersecurity, ethics and legal, organizational structure, and data governance. These design fields correlate with the previously mentioned clusters of AI use cases and will require an overall value and cost analysis. By taking all this information into consideration, an organization should be able to make a well-founded decision about the selection of its AI applications. The last step of AI infrastructure management is the customization and prioritization of the defined use case clusters, which will help a company design a clear roadmap for AI implementation.
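The value and cost analysis and the subsequent prioritization could be sketched as follows. This is an illustrative assumption rather than the framework’s prescribed method: the `UseCase` fields, the scoring formula and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Hypothetical description of one AI use case cluster."""
    name: str
    value: float          # estimated benefit, arbitrary units
    cost: float           # estimated effort, same units
    strategic_fit: float  # 0..1, alignment with the corporate strategy


def priority(use_case):
    """Assumed score: value per unit of cost, weighted by strategic fit."""
    return use_case.strategic_fit * use_case.value / use_case.cost


# Hypothetical estimates, invented for illustration.
use_cases = [
    UseCase("customer service chatbot", value=80, cost=40, strategic_fit=0.9),
    UseCase("contract drafting assistant", value=120, cost=100, strategic_fit=0.5),
    UseCase("real-time market analysis", value=150, cost=60, strategic_fit=0.8),
]

# The roadmap lists the highest-scoring use cases first.
roadmap = sorted(use_cases, key=priority, reverse=True)
for position, use_case in enumerate(roadmap, start=1):
    print(position, use_case.name, round(priority(use_case), 2))
```

Weighting by strategic fit keeps the ranking tied to the corporate strategy, so a high-value use case that does not match the strategy does not automatically come first.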

4. Ethical considerations

Ethical considerations are important for the future development of AI! Our framework does not rank ethical considerations below the purely technical or business aspects of the development strategy. Rather, they serve as the final check on the overall roadmap and complete our holistic framework for actually creating ethical AI.

To develop technologies and methodologies underlying successful AI adoption, it is essential to be mindful of the ethical principles and the components of trustworthy AI.

The 5 principles of ethical AI

To pave the way towards ethical and trustworthy AI applications, the European Commission appointed the Artificial Intelligence High-Level Expert Group (AI HLEG) in 2018. In turn, the AI HLEG formulated five ethical principles that an ethical and trustworthy AI system should follow.

1. Principle of Beneficence

First, there is the Principle of Beneficence, which says that the AI system should do good. The AI HLEG proposes that the system should be developed and designed to improve individual and collective wellbeing by contributing towards a higher goal, e.g., the achievement of the UN Sustainable Development Goals that are promoting an ethical and sustainable future.

2. Principle of Non-Maleficence

The second principle is the Principle of Non-Maleficence, which focuses on the aspect that an AI system should protect the dignity, liberty, privacy, safety, and security of all users and all parts of society as well. The principle deeply reflects the idea of social justice for all parts of society.

3. Principle of Autonomy

As a third principle, the AI HLEG lists the Principle of Autonomy. Humans who interact with AI must retain full self-determination while doing so. There should be an opt-in or opt-out option when being exposed to AI.

4. Principle of Justice

The Principle of Justice is the fourth principle the AI HLEG lists. A fair AI system ensures that individuals and minority groups remain free from bias and discrimination. Additionally, the positive and negative results of AI should be distributed evenly across different parts of society.
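One simple way to check whether outcomes are distributed evenly is to compare how often a system selects people from different groups. The sketch below is a minimal illustration, not a complete fairness audit: the function names, the group labels and the decisions are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive decisions per group.

    decisions: iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def parity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical screening decisions, invented for illustration.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(decisions)
ratio = parity_ratio(rates)
print(rates, ratio)
```

In this invented data, group B is selected half as often as group A. A ratio that far below 1 is a signal to investigate the training data and the model, as in the resume-screening example discussed earlier.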

5. Principle of Explicability

The fifth and final principle is the Principle of Explicability, which treats transparency as the key ingredient for building trust in an AI system. Transparency implies that the AI system needs to be auditable, comprehensible, and intelligible to humans.
