Whether it is on the Daily Show with Trevor Noah, DALL-E 2 turning text into pictures, a Twitter chatbot called Tay, or more controversial applications of Artificial Intelligence (AI) such as lethal autonomous weapon systems (LAWS): AI has the potential to shape our daily lives in the near future. The application of AI technologies is driving growth at individual, business, and economic levels. In fact, AI has started to outperform human beings in a range of work activities.

With the global AI market now expected to hit yearly sales of nearly $32 trillion according to McKinsey and Company, the industry is booming and expanding to even broader application fields. Over the last few decades, the evolution of AI has mostly revolved around the advancement of linguistic, mathematical, and logical reasoning abilities. However, the next wave of AI advancements is pushing towards developing emotional intelligence, enabling machines to comprehend the physical world to a certain degree. At the same time, we can observe the downsides of using AI. Algorithmic bias, discrimination against specific genders or ethnic groups learned from the underlying data sources, and underlying issues with fairness have all been identified across various AI applications. Affected areas include advertising, AI-based recruiting software, predictive models in healthcare such as the Ada Health chatbot, and facial recognition software, and they have sparked a growing discussion about ethics in AI.

As these developments are only expected to continue, we want to draw your attention to Artificial Intelligence and build a solid understanding of these innovations. With this blog article, we intend to explain what AI actually is, outline the history of AI, search for a holistic definition of AI, and delineate the most important key terms related to AI.

The history of AI

Since Alan Turing posed the famous question “Can machines think?” in 1950, we have witnessed the emergence of supposedly intelligent machines. The first half of the 20th century was the first time people saw something like artificial intelligence develop. Remember the “heartless” Tin Man from The Wizard of Oz or the humanoid robot that impersonated Maria in Metropolis? Well, one could argue that these can be seen as the earliest form of AI. However, speaking of Turing, there was one thing holding him back from researching AI even more: the cost of computing in the 1950s. Leasing a computer was costly, up to $200,000 per month to be exact. But there was one change coming that significantly enhanced the AI landscape.

The proof of concept came in 1956 with the Logic Theorist by Allen Newell, Cliff Shaw, and Herbert Simon. The Logic Theorist was a program designed to mimic the problem-solving skills of a human. It is considered by many to be the first artificial intelligence program and was presented at the Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI) hosted by John McCarthy and Marvin Minsky. Today, we can agree that this conference was a booster for AI research in the decades to come. After the DSRPAI, computers became more capable and could store and work through more data. Early programs such as the natural language processing tool ELIZA showed first successes toward the goals of problem-solving and the interpretation of language. In 1970 Marvin Minsky told Life Magazine, “from three to eight years we will have a machine with the general intelligence of an average human being.” In hindsight this sounds a bit too enthusiastic, and many contemporaries disagreed with this judgement. People argued that computers were just too “weak” to show any kind of intelligence. Hans Moravec, a doctoral student of McCarthy at the time, stated that “computers were still millions of times too weak to exhibit intelligence.” With these statements, it was no surprise that funding dried up, governments lost patience, and public interest faded, all of which slowed AI development rather than kick-starting a new wave.

So, for roughly ten years, until the 1980s, there were few new AI developments. Then Edward A. Feigenbaum, a researcher from Stanford, introduced expert systems, which mimicked the decision-making process of a human expert. After the system learned from a human expert how to respond to a given situation, non-experts could receive advice from that program. From 1982 to 1990, the second catalyst for AI was the Japanese government, which played a critical role in the further development of AI. With its Fifth Generation Computer Project (FGCP), it poured over $400 million into the development of AI. Its goals included revolutionizing computer processing, implementing logic programming, and improving artificial intelligence overall. Even though most of these goals were missed, the FGCP can be understood as a major accelerator for the future of AI.

Maybe you have heard of Moore’s Law before? In its original form, it observes that the number of transistors on a chip doubles roughly every two years; it is often loosely summarized as computer memory and speed doubling every year or two. By the 2000s, this growth had finally caught up with the needs of AI, and the recent developments in quantum computing in 2021 and 2022 have even surpassed our expectations. Additionally, the broader use of the web and connected devices increased the amount of available data, which in turn increased the potential training data sets and application fields for AI.

Ironically, throughout the 2000s and the 2010s, numerous AI innovations were developed without governmental funding, and this continued into the 2020s. If the fundamental way we code AI has not changed, what has? It turns out that the underlying limit of computer storage that was holding us back 30 years ago is no longer a problem.

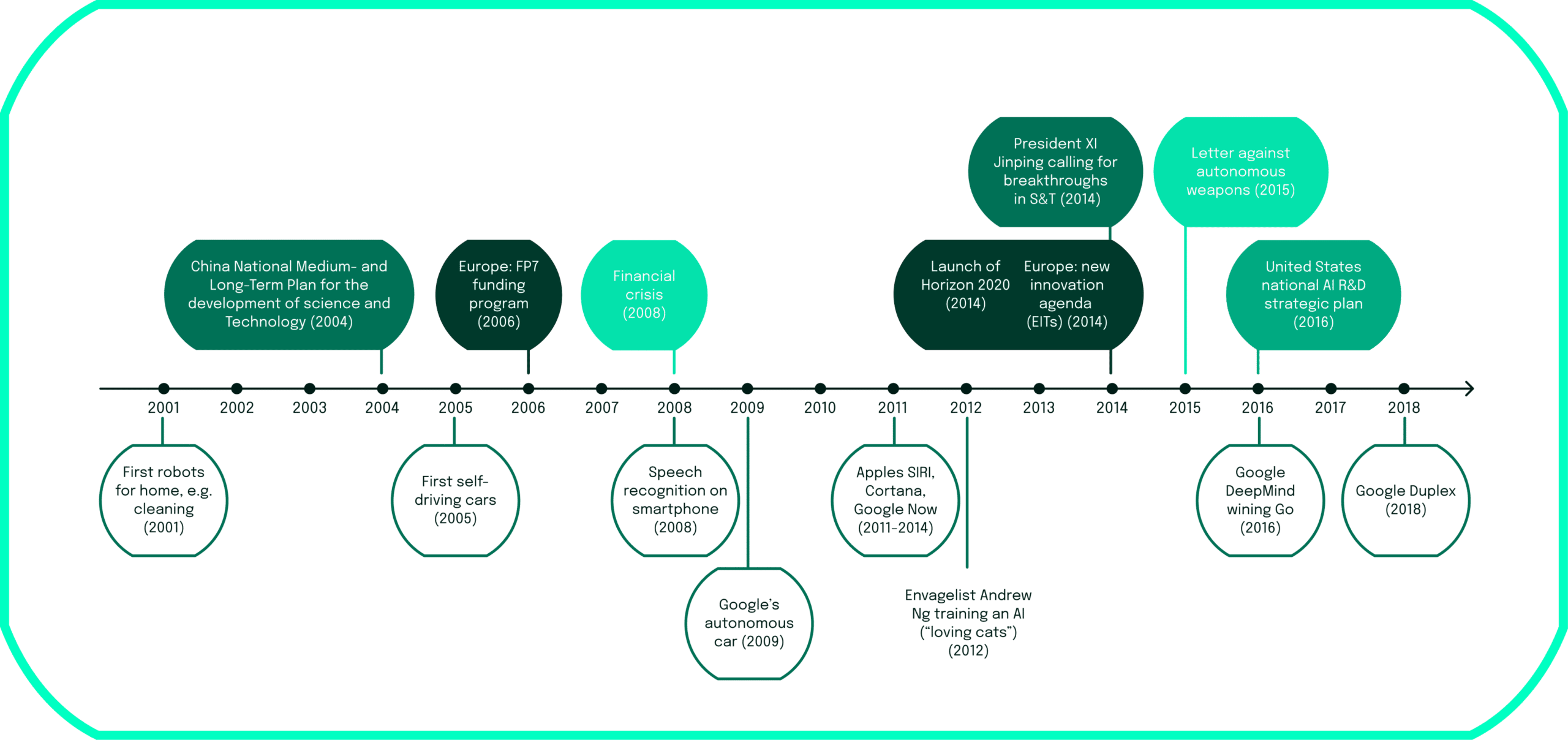

The first effects of Moore’s Law could already be seen in 1997, when Garry Kasparov resigned after 19 moves in a game against Deep Blue, a chess-playing computer developed by scientists at IBM. After this point, AI really took off! Because we could probably write a book about the developments of the past 20 years, we decided to create the accompanying graphic and timeline, highlighting some key AI tools and developments.

In 2016 and 2017, matches similar to the one against Deep Blue were played. Google DeepMind’s AlphaGo defeated multiple Go players in 2016 and the world’s number one player, Ke Jie, in 2017. Go is known as the most challenging classical game for artificial intelligence. The AI has a practically unlimited number of possible move sequences to choose from and evaluates them using a search tree. Before 2016, an AI system could not handle the sheer number of possible Go moves or evaluate the strength of each possible board position. This highlights the underlying relationship between AI development and Moore’s Law: we saturate the capabilities of AI to the level of our current computational power (computer storage and processing speed), and then wait for Moore’s Law to catch up again.
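To get a feeling for why the search tree in Go is so much harder to handle than the one in chess, a rough back-of-the-envelope calculation helps. The sketch below assumes commonly cited ballpark figures that are not taken from this article: about 35 possible moves per turn over about 80 turns for chess, and about 250 moves per turn over about 150 turns for Go. The function name `log10_tree_size` is purely illustrative.

```python
import math


def log10_tree_size(branching_factor: int, depth: int) -> float:
    """Return log10 of branching_factor ** depth.

    This approximates the number of move sequences in a full game tree
    where every position offers `branching_factor` moves and a game
    lasts `depth` moves. We work with logarithms because the raw
    numbers are far too large to read comfortably.
    """
    return depth * math.log10(branching_factor)


# Ballpark estimates (assumptions for illustration, not exact values).
chess = log10_tree_size(branching_factor=35, depth=80)
go = log10_tree_size(branching_factor=250, depth=150)

print(f"Chess: roughly 10^{chess:.0f} possible move sequences")
print(f"Go:    roughly 10^{go:.0f} possible move sequences")
```

Under these assumptions, the Go tree is larger than the chess tree by hundreds of orders of magnitude, which is why simply waiting for faster hardware was not enough and smarter ways of evaluating positions were needed as well.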

Of course, one interesting topic to write about is the future of AI – where will technology take us, and how will the future AI systems influence our lives? Essential and even philosophical questions that we cannot answer in one short paragraph. But at least, you already know what kind of articles will follow this one.

Defining Artificial Intelligence

One question that always comes up is what AI actually is and how the technology can be defined. To answer this, we sadly cannot take a linear road as we did with the history of AI. Therefore, we state a couple of broader definitions that are present in academic literature and used by practitioners. But as neither paints a holistic picture, we turn to a European institution to help us out.

Justin Healey defines AI as “[…] a computer system that can do tasks that humans need intelligence to do”. Wagman writes that AI is “a theoretical psychology that seeks to discover the nature of the versatility and power of the human mind by constructing computer models of intellectual performance in a widening variety of cognitive domains”, and a panel of experts similarly describes AI as a “branch of computer science that studies the properties of intelligence by synthesizing intelligence”. There are countless different definitions out there, so we also asked ourselves which ones to mention. Luckily, the European Commission is giving us a helping hand here.

Exactly for reasons like this, the EU constructs expert groups on certain topics. For AI, there is the High-Level Expert Group on Artificial Intelligence (AI HLEG). The AI HLEG comes in and creates an in-depth definition of Artificial Intelligence, from which the general working framework of AI can be directly derived. Why do we even mention the HLEG here? Well, they will play an essential part for the second article about adopting ethical and trustworthy AI. The expert group is made up of experts from different industries and disciplines. They work on a set of tasks and goals defined by the European Commission.

The AI HLEG defines AI as “software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information, derived from this data and deciding the best action(s) to take to achieve the given goal.”

From the HLEG definition, we can derive how AI fundamentally works: AI combines large amounts of data with fast, iterative processing and algorithms to reach a given goal. The software learns automatically from patterns or features in the given and continuously collected data sets. To first figure out these patterns and features, a training data set is needed to start the adjustment process for the AI system. So, AI is not explicitly programmed for each case; rather, it finds its way by using the training data set. After finding patterns in the data set, the AI continuously adapts given the collected real-life data, taking its own previous decisions into account to reach the given, complex goal. We have already written about algorithm bias in the introduction. During the ongoing adjustment process, AI learns by, among other things, adjusting its internal parameters (its weights and so-called bias values) to achieve more accurate results as more data is taken into account. Note that these bias values are numerical parameters of the model and are not the same thing as the algorithmic bias from the introduction, which depends largely on the data the system learns from.
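The adjustment process described above can be illustrated with a deliberately tiny example. The sketch below is an assumption-laden toy and not how any particular AI product works: it uses a made-up training data set that follows the pattern y = 2x + 1, a model with a single weight and a single bias value, and plain gradient descent as the learning rule. All names (`train`, `learning_rate`, `epochs`) are chosen for illustration only.

```python
# Toy training data (hypothetical): the hidden pattern is y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = weight * x + bias by repeatedly reducing the squared error."""
    weight, bias = 0.0, 0.0  # the model starts out knowing nothing
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example.
        errors = [weight * x + bias - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error w.r.t. weight and bias.
        grad_weight = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_bias = sum(2 * e for e in errors) / n
        # Nudge both parameters in the direction that lowers the error.
        weight -= learning_rate * grad_weight
        bias -= learning_rate * grad_bias
    return weight, bias


weight, bias = train(xs, ys)
prediction = weight * 5.0 + bias  # apply the learned pattern to unseen input

print(f"learned weight={weight:.3f}, bias={bias:.3f}")
print(f"prediction for x=5: {prediction:.3f}")
```

Nothing in the code states the rule y = 2x + 1 directly; the program recovers it from the examples alone. The `bias` variable here is simply a number the model tunes and has nothing to do with unfair treatment of groups of people.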

AI keyword differentiation

Remember the question Alan Turing asked, which we used to kick off the history of AI? The Turing test, proposed by Alan Turing, was designed as a thought experiment that would sidestep the philosophical vagueness of the question “Can a machine think?” A computer passes the test if a human interrogator, after posing some written questions, cannot tell whether the written responses come from a person or a computer. Importantly, the computer’s responses should not be hard-coded, meaning that the answers should not be embedded directly into the source code of the program.

During the test, one human acts as the questioner, while a second human and the computer act as respondents. The questioner interrogates the respondents within a specific subject area, using a specified format and context. After a preset number of questions, the questioner is asked to decide which respondent was human and which was a computer. The test is repeated many times. If the questioner makes the correct determination in only half of the test runs or fewer, the computer is considered to have artificial intelligence, because the questioner regards the computer as “just as human” as the actual human respondent. A more visual explanation can be found here.
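The decision rule of the test can be written down in a few lines. The sketch below assumes the usual reading of the rule: the computer passes when the questioner correctly identifies it in no more than half of the runs, which means the questioner does no better than guessing. The function name `passes_turing_test` and the two example series are hypothetical.

```python
def passes_turing_test(questioner_was_correct: list[bool]) -> bool:
    """Decide the outcome of a series of test runs.

    Each entry records whether the questioner correctly identified
    which respondent was the computer in that run. The computer passes
    if the questioner was right in at most half of the runs.
    """
    if not questioner_was_correct:
        raise ValueError("at least one test run is required")
    correct_runs = sum(questioner_was_correct)
    return correct_runs * 2 <= len(questioner_was_correct)


# Hypothetical results of two series with six runs each.
convincing_machine = [True, False, False, True, False, False]  # 2 of 6 correct
obvious_machine = [True, True, True, False, True, True]        # 5 of 6 correct

print(passes_turing_test(convincing_machine))
print(passes_turing_test(obvious_machine))
```

In the first series the questioner is right only twice, so the machine counts as passing; in the second the questioner spots the machine almost every time, so it does not.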

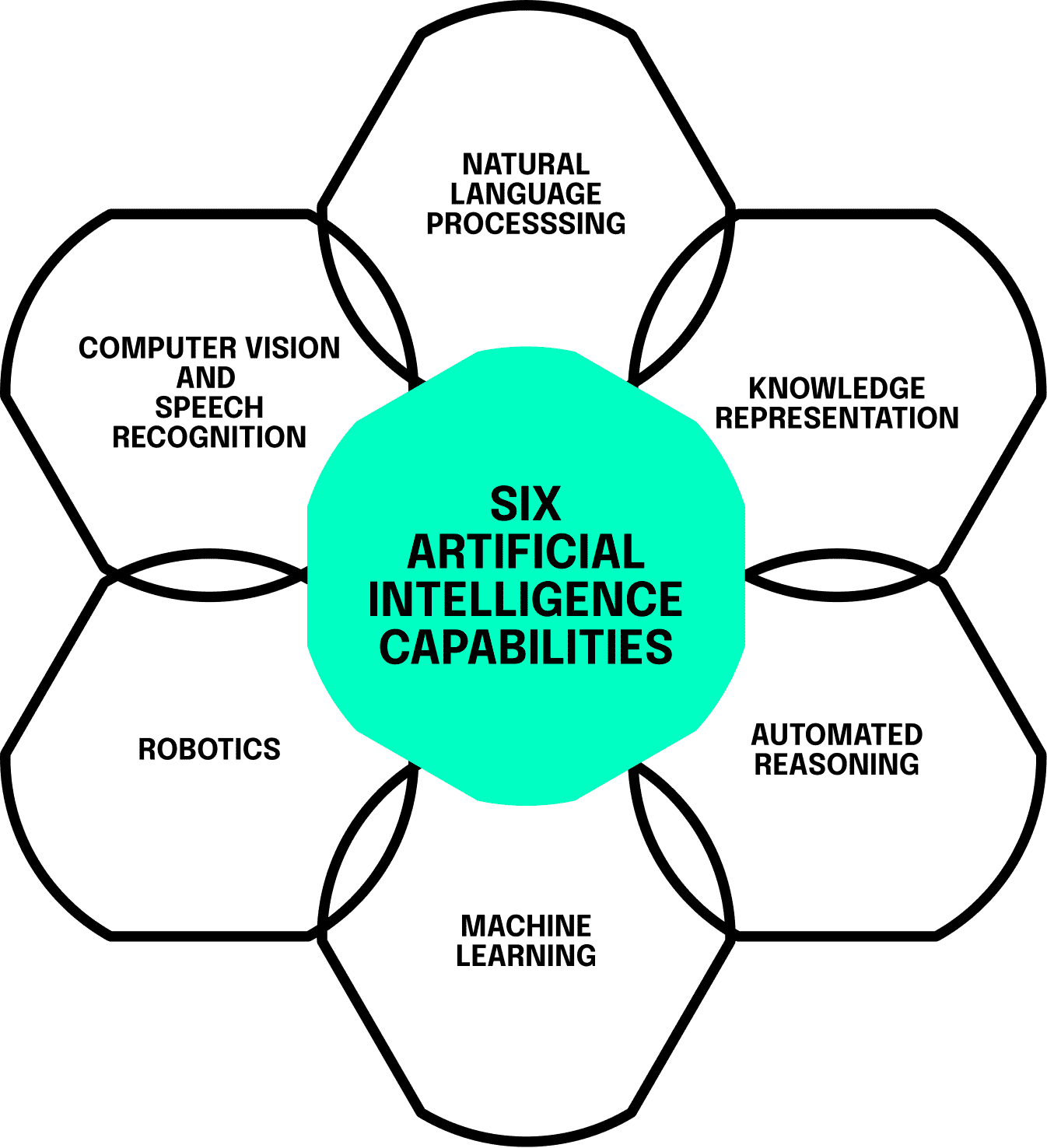

Of course, when Turing defined the test, computers were not as advanced as they are right now. So, at the time, some of the capabilities listed below were more of a theoretical thought experiment. For a computer to pass the Turing Test, it would need the following capabilities:

1. natural language processing to communicate successfully in a human language;

2. knowledge representation to store what it knows or hears;

3. automated reasoning to answer questions and to draw new conclusions;

4. machine learning to adapt to new circumstances and to detect and extrapolate patterns.

Turing viewed the physical simulation of a person as unnecessary to demonstrate intelligence. However, other researchers have proposed a total Turing test, which requires interaction with objects and people in the real world. To pass the total Turing test, a robot will need:

5. computer vision and speech recognition to perceive the world;

6. robotics to manipulate objects and move around.

These six disciplines compose most of AI. Yet, AI researchers have devoted little effort to passing the Turing test, believing that it is more important to study the underlying principles of intelligence. The quest for “artificial flight” for instance succeeded when engineers and inventors stopped imitating birds and started using wind tunnels and learning about aerodynamics. Aeronautical engineering texts do not define the goal of their field as making “machines that fly so exactly like pigeons that they can fool even other pigeons.” So, it will always be a challenge to exactly replicate human intelligence, as there are various paradigms in place that define human intelligence differently, and overall, human intelligence is not yet completely understood. Rather than focusing solely on the replication of intelligence, it could be beneficial to improve the six AI capabilities defined by the Turing Test, and therefore, slowly move naturally towards the replication of human intelligence.

However, the Turing Test and the six AI capabilities deduced from it are theoretical and historical. As AI is moving forward, we do not want to rely only on this historical deduction of key disciplines but also on the current differentiation of key terms. In today’s economy, we can identify more than the six “classical” AI characteristics. A quite broadly accepted differentiation that you can find online is the following:

- Neural Networks – brain modelling, time series prediction, classification

- Evolutionary Computation – genetic algorithms, genetic programming

- Vision – object recognition, image understanding

- Robotics – intelligent control, autonomous exploration

- Expert Systems – decision support systems, teaching systems

- Speech Processing – speech recognition and production

- Natural Language Processing – machine translation

- Planning – scheduling, game playing

- Machine Learning – decision tree learning, version space learning

As with many AI technologies, the differentiation of keywords and areas of AI is an ongoing development and is bound to change given the innovative power currently observable in the academic and professional world.

The key takeaways of this first AI article

So, what are your key takeaways from this article? Well, you should now understand how AI developed and remember at least a couple of keywords such as Alan Turing and the Turing Test, from which the six different characteristics of AI are derived. You should remember the Fifth Generation Computer Project (FGCP), which ran from 1982 to 1990 and acted as a catalyst for future AI developments. Moore’s Law should now be your main explanation for why AI development took off starting in the 2000s. Additionally, the definition of AI by the AI HLEG as

“software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information, derived from this data and deciding the best action(s) to take to achieve the given goal.”

should give you a better understanding of how to describe AI to your friends and work colleagues. By remembering the Turing Test, you know that the six different areas of AI are:

- natural language processing

- knowledge representation

- automated reasoning

- machine learning

- computer vision and speech recognition

- robotics

As AI is a fascinating topic and we at neosfer are really passionate about the future potential of AI, this will not be the last article about this topic. In the next one, we turn to the more business-centered side of AI and highlight how companies can implement AI in reality. As we also talked about how we could write a book about AI technologies, there is an article about the future of AI coming your way as well.

Anyoha, R. (2020, April 23). The History of Artificial Intelligence. Science in the News. https://sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/

Bench-Capon, T., Araszkiewicz, M., Ashley, K., Atkinson, K., Bex, F., Borges, F., Bourcier, D., Bourgine, P., Conrad, J. G., Francesconi, E., Gordon, T. F., Governatori, G., Leidner, J. L., Lewis, D. D., Loui, R. P., McCarty, L. T., Prakken, H., Schilder, F., Schweighofer, E., . . . Wyner, A. Z. (2012). A history of AI and Law in 50 papers: 25 years of the international conference on AI and Law. Artificial Intelligence and Law, 20(3), 215–319. https://doi.org/10.1007/s10506-012-9131-x

Buolamwini, J. (2018, January 21). Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. PMLR. https://proceedings.mlr.press/v81/buolamwini18a.html

Datta, A. (2018). Discrimination in Online Personalization: A Multidisciplinary Inquiry. https://escholarship.org/uc/item/4rw2z9f8

Duan, Y., Edwards, J. S., & Dwivedi, Y. K. (2019). Artificial intelligence for decision making in the era of Big Data – evolution, challenges and research agenda. International Journal of Information Management, 48, 63–71. https://doi.org/10.1016/j.ijinfomgt.2019.01.021

Healey, J. (2020). Artificial Intelligence (1st ed.). Justin Healey. https://spinneypress.com.au/product/artificial-intelligence/

Hosny, A., Parmar, C., Quackenbush, J., Schwartz, L. H., & Aerts, H. J. W. L. (2018). Artificial intelligence in radiology. Nature Reviews Cancer, 18(8), 500–510. https://doi.org/10.1038/s41568-018-0016-5

Huang, M. H., & Rust, R. T. (2018). Artificial Intelligence in Service. Journal of Service Research, 21(2), 155–172. https://doi.org/10.1177/1094670517752459

Hunkenschroer, A. L., & Luetge, C. (2022). Ethics of AI-Enabled Recruiting and Selection: A Review and Research Agenda. Journal of Business Ethics, 178(4), 977–1007. https://doi.org/10.1007/s10551-022-05049-6

John-Mathews, J. M., Cardon, D., & Balagué, C. (2022). From Reality to World. A Critical Perspective on AI Fairness. Journal of Business Ethics, 178(4), 945–959. https://doi.org/10.1007/s10551-022-05055-8

Kreutzer, R. T., & Sirrenberg, M. (2019). Künstliche Intelligenz verstehen: Grundlagen – Use-Cases – unternehmenseigene KI-Journey (1. Aufl. 2019). Springer Gabler.

Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447–453. https://doi.org/10.1126/science.aax2342

One Hundred Year Study on Artificial Intelligence. (n.d.). One Hundred Year Study on Artificial Intelligence (AI100). https://ai100.stanford.edu/2016-report

Russell, S., & Norvig, P. (1995). Artificial intelligence: a modern approach. Choice Reviews Online, 33(03), 33–1577. https://doi.org/10.5860/choice.33-1577

Turing, A. M. (1950). Computing Machinery and Intelligence. Mind, LIX(236), 433–460. https://doi.org/10.1093/mind/lix.236.433

Wagman, M. (1999). The Human Mind According to Artificial Intelligence: Theory, Research, and Implications. Praeger Publishers.

Wennker, P. (2020). Künstliche Intelligenz in der Praxis: Anwendung in Unternehmen und Branchen: KI wettbewerbs- und zukunftsorientiert einsetzen (1. Aufl. 2020). Springer Gabler.
